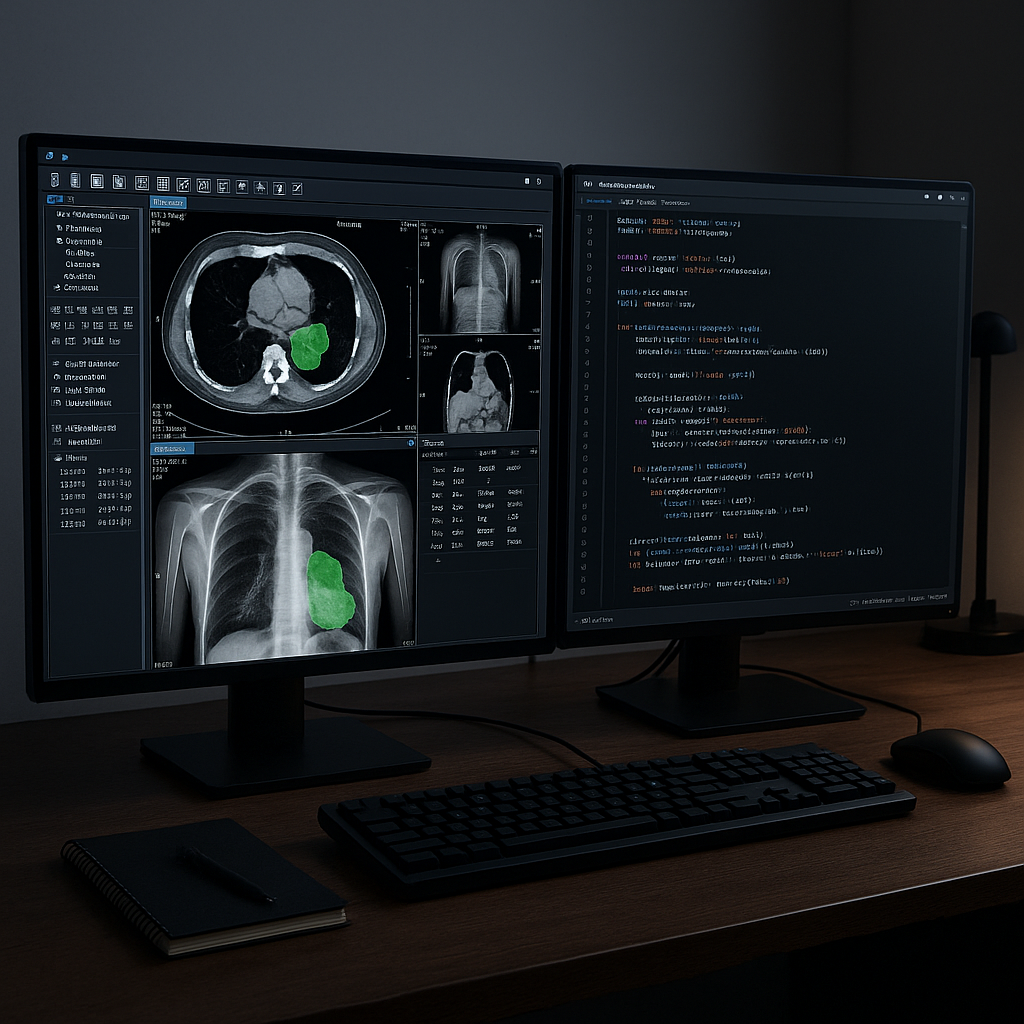

Here’s a sobering reality check for health AI:

A leading medical AI company recently discovered that 40% of the X-rays in its training data were mislabeled.

The cost? Six months of lost development time, $2 million wasted, and a delayed FDA submission.

This isn’t an isolated incident. While healthcare generates massive amounts of data daily, the uncomfortable truth remains: quantity doesn’t equal quality.

Poor data foundations — incomplete patient records, unverified imaging sources, demographic blind spots, and inconsistent data standards — are silently sabotaging AI projects across the industry.

At NexClinAI, we’ve seen this challenge firsthand through our work with healthcare AI teams globally. That’s why we built our entire data curation process around a core principle:

AI is only as reliable as the data that trains it.

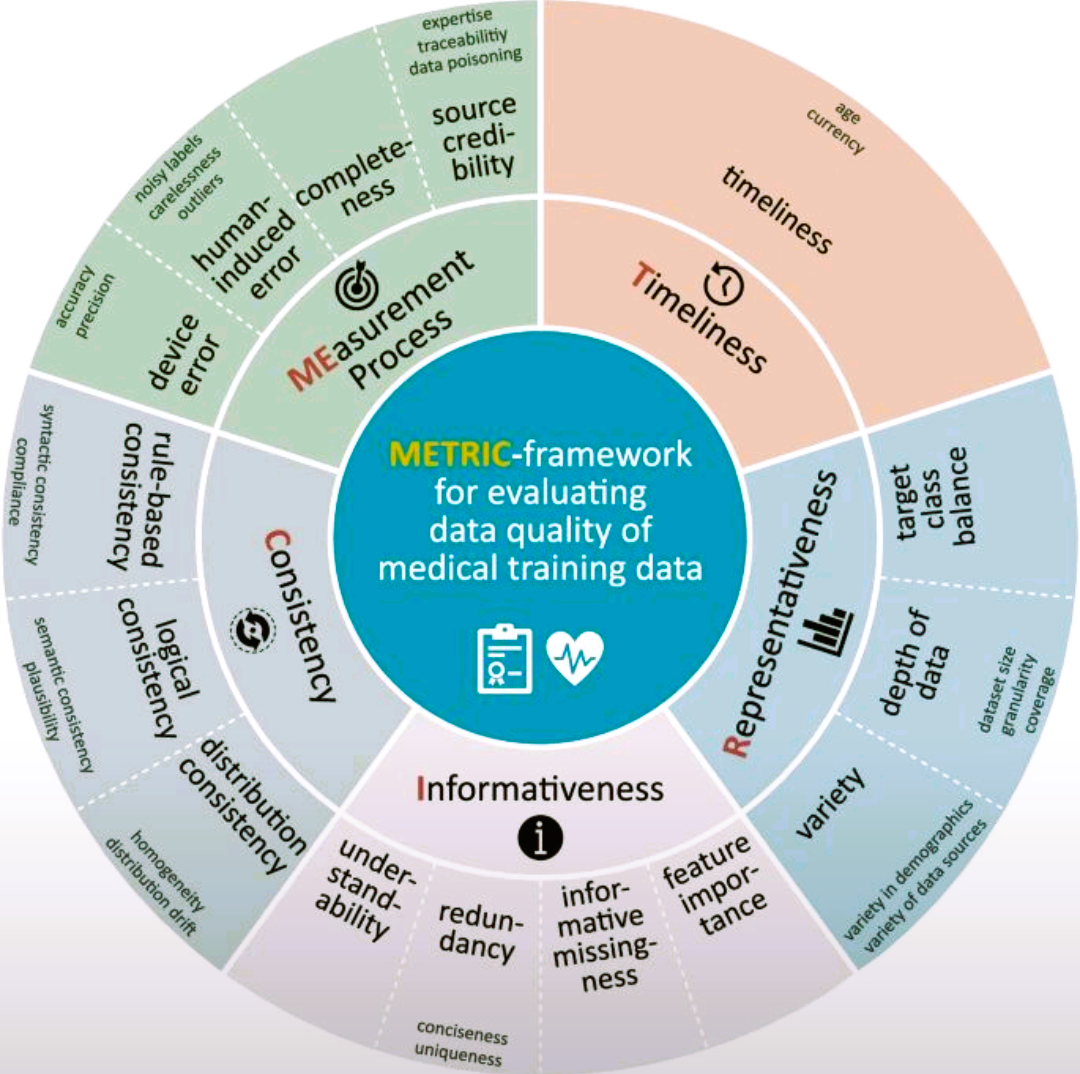

The METRIC Framework: Our North Star for Data Quality

After assessing multiple data quality methodologies, we adopted the METRIC Framework — a structured approach originally developed for healthcare AI validation that directly addresses the challenges our clients face.

Think of METRIC as a comprehensive health check-up for your training data:

Measurement Process:

The Reality: Medical devices can fail, radiologists have off days, and electronic health records contain human errors.

Our Approach: We trace every data point back to its source, verify imaging protocols, and flag potential acquisition errors before they enter your training pipeline.
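To make the idea concrete, here is a minimal Python sketch of a source-and-protocol check. It is an illustration only, not NexClinAI's production tooling: the `ImageRecord` fields, the `ACCEPTED_PROTOCOLS` allow-list, and the function name are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


# Hypothetical record layout; the field names are assumptions for illustration.
@dataclass
class ImageRecord:
    record_id: str
    source_institution: Optional[str]
    modality: Optional[str]
    protocol: Optional[str]


# Hypothetical allow-list of acquisition protocols accepted per modality.
ACCEPTED_PROTOCOLS = {
    "XR": {"PA", "AP", "LATERAL"},
    "CT": {"CHEST_ROUTINE", "CHEST_LOW_DOSE"},
}


def acquisition_issues(record: ImageRecord) -> list[str]:
    """Return a list of problems found; an empty list means the record passes."""
    issues = []
    if not record.source_institution:
        issues.append("missing source institution")
    if record.modality not in ACCEPTED_PROTOCOLS:
        issues.append(f"unknown modality: {record.modality!r}")
    elif record.protocol not in ACCEPTED_PROTOCOLS[record.modality]:
        issues.append(f"unexpected protocol: {record.protocol!r}")
    return issues


records = [
    ImageRecord("img-001", "Hospital A", "XR", "PA"),
    ImageRecord("img-002", None, "XR", "OBLIQUE"),
]

# Keep only the records that have at least one issue, keyed by record id.
flagged = {}
for record in records:
    problems = acquisition_issues(record)
    if problems:
        flagged[record.record_id] = problems
```

Records that end up in `flagged` would be held back from the training pipeline until someone resolves the listed issues.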

Timeliness:

The Reality: Medical practices evolve rapidly. COVID-19 taught us that yesterday’s “gold standard” can become obsolete overnight.

Our Approach: We continuously refresh datasets and retire outdated samples to ensure your models reflect current clinical realities.
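As a rough sketch, retiring outdated samples can start with a simple age cutoff. The example below assumes each sample carries an `acquired` date and uses a hypothetical five-year threshold; in practice the retirement rule would more likely follow changes in clinical guidelines than a fixed age.

```python
from datetime import date, timedelta


def split_by_age(samples, today, max_age_days):
    """Partition samples into (current, retired) using the acquisition date."""
    cutoff = today - timedelta(days=max_age_days)
    current = [s for s in samples if s["acquired"] >= cutoff]
    retired = [s for s in samples if s["acquired"] < cutoff]
    return current, retired


# Hypothetical samples; only the acquisition date matters for this check.
samples = [
    {"id": "a", "acquired": date(2015, 3, 1)},
    {"id": "b", "acquired": date(2023, 6, 15)},
]

current, retired = split_by_age(
    samples, today=date(2024, 1, 1), max_age_days=5 * 365
)
```

Keeping the retired list, rather than deleting those samples outright, leaves an audit trail of what was removed and why.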

Representativeness:

The Reality: A model trained predominantly on data from one demographic can fail catastrophically when deployed globally.

Our Approach: We source data from diverse hospital networks and populations, ensuring your AI works for patients across demographics.
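One simple way to spot demographic blind spots is to compare each subgroup's share of the dataset with its share in the intended patient population. The sketch below is illustrative only: the age bands, the reference shares, and the 5-point tolerance are made-up values, and a real audit would cover more attributes than age.

```python
from collections import Counter


def underrepresented(groups, reference_share, tolerance=0.05):
    """Return {group: shortfall} for groups whose dataset share falls
    more than `tolerance` below the reference population share."""
    if not groups:
        raise ValueError("dataset is empty")
    counts = Counter(groups)
    total = len(groups)
    gaps = {}
    for group, expected in reference_share.items():
        observed = counts.get(group, 0) / total
        shortfall = expected - observed
        if shortfall > tolerance:
            gaps[group] = round(shortfall, 3)
    return gaps


# Hypothetical dataset: one age band per patient record.
age_bands = ["18-40"] * 70 + ["41-65"] * 25 + ["65+"] * 5

# Hypothetical target population shares.
reference = {"18-40": 0.35, "41-65": 0.40, "65+": 0.25}

gaps = underrepresented(age_bands, reference)
```

Here the two older age bands fall short of their reference shares, which would be a signal to source more data for them before training.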

Informativeness:

The Reality: Not all medical images are created equal. A blurry chest X-ray teaches your model nothing useful.

Our Approach: We curate datasets rich in diagnostic value, complete with relevant clinical context, and free from redundant noise.
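Redundancy is the easiest part of this to automate. As a minimal sketch, byte-identical files can be detected with a content hash; the file names and payloads below are placeholders. Note the limits: this catches only exact copies, while near-duplicates and low-quality images such as blurry scans need image-level analysis that is outside the scope of this example.

```python
import hashlib


def drop_exact_duplicates(files):
    """files: iterable of (name, payload_bytes).

    Keeps the first file seen for each SHA-256 digest and reports every
    later copy together with the name of the file it duplicates.
    """
    first_seen = {}
    kept, dropped = [], []
    for name, payload in files:
        digest = hashlib.sha256(payload).hexdigest()
        if digest in first_seen:
            dropped.append((name, first_seen[digest]))
        else:
            first_seen[digest] = name
            kept.append(name)
    return kept, dropped


# Placeholder payloads standing in for real image files.
files = [
    ("scan_1.dcm", b"\x00\x01\x02"),
    ("scan_1_copy.dcm", b"\x00\x01\x02"),
    ("scan_2.dcm", b"\x00\x01\x03"),
]

kept, dropped = drop_exact_duplicates(files)
```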

Consistency:

The Reality: Different hospitals use different imaging protocols, data formats, and terminology.

Our Approach: We standardize and harmonize data formats while preserving clinical meaning, so that data from different sites can be combined into a single training set.
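Terminology harmonization can be sketched as a lookup from site-specific labels to one canonical vocabulary. The mapping below is hypothetical and deliberately tiny; the point is that the original label is kept alongside the harmonized one, and anything unmapped is sent to a person instead of being guessed.

```python
# Hypothetical mapping from site-specific labels to a canonical vocabulary.
CANONICAL_LABELS = {
    "cxr": "chest_xray",
    "chest x-ray": "chest_xray",
    "chest radiograph": "chest_xray",
    "ct chest": "chest_ct",
}


def harmonize(label):
    """Return the canonical label, or None if the label is not in the mapping."""
    # Normalize case and collapse repeated whitespace before the lookup.
    key = " ".join(label.lower().split())
    return CANONICAL_LABELS.get(key)


raw_labels = ["CXR", "Chest  X-Ray", "chest radiograph", "Thorax CT"]

# Keep the raw label next to the harmonized one so nothing is lost.
harmonized = [(raw, harmonize(raw)) for raw in raw_labels]

# Labels with no mapping are queued for human review.
unmapped = [raw for raw, canonical in harmonized if canonical is None]
```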

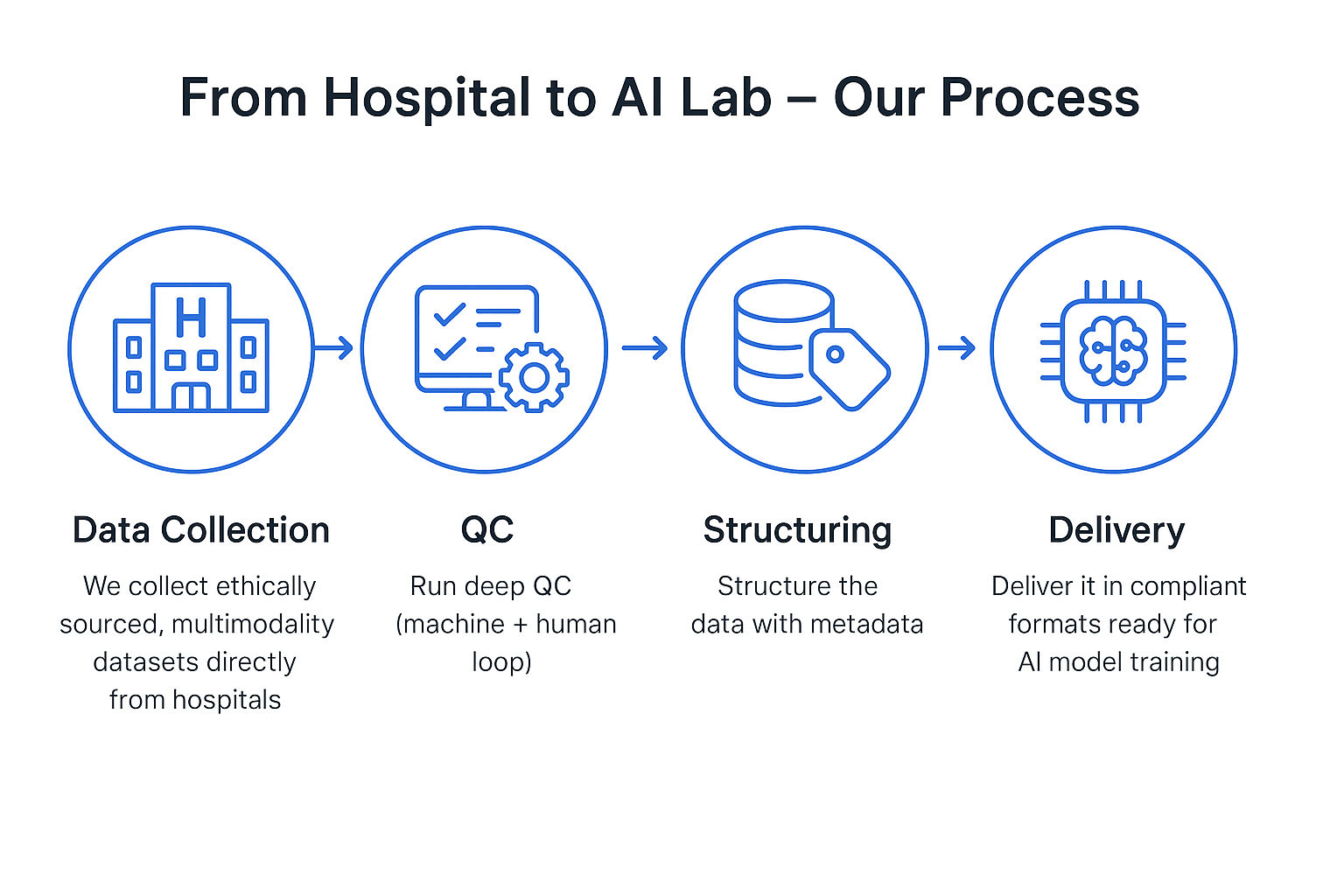

How NexClinAI Puts METRIC into Action

Source Credibility Isn’t Negotiable:

Every dataset in our catalog comes from licensed healthcare institutions with full legal documentation.

No web scraping, no gray-area acquisitions — just transparent, traceable data partnerships.

Quality Assurance Beyond Automation:

While many rely solely on automated checks, our QC workflows include manual reviews and validations to catch dataset inconsistencies and edge cases that could derail model performance.
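One common way to combine automation with manual review is to let an automated check decide what a human looks at. The sketch below assumes each image has labels from several independent annotators and routes any disagreement to a reviewer; the data and function name are hypothetical, and this is not a description of NexClinAI's actual QC workflow.

```python
def needs_manual_review(labels_by_image):
    """labels_by_image: {image_id: [label from each independent annotator]}.

    Returns the sorted ids of images whose annotators did not all agree.
    """
    return sorted(
        image_id
        for image_id, labels in labels_by_image.items()
        if len(set(labels)) > 1
    )


# Hypothetical annotations from three readers per image.
annotations = {
    "img-001": ["pneumonia", "pneumonia", "pneumonia"],
    "img-002": ["normal", "pneumonia", "normal"],
    "img-003": ["normal", "normal", "normal"],
}

review_queue = needs_manual_review(annotations)
```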

Privacy-First, Compliance-Always:

We don’t just meet regulatory standards like HIPAA, GDPR, and India’s DPDP Act — we exceed them.

Because one compliance failure can shut down years of AI development progress.

Living Datasets, Not Static Archives:

Medical knowledge evolves. So do our datasets. We regularly retire outdated samples and introduce new cases that reflect current clinical practices.

Why Data Quality Matters Beyond Technical Details

For Healthcare AI Teams

You’re not just building algorithms — you’re influencing patient lives.

Biased or inaccurate training data doesn’t just cause model failures; it can perpetuate healthcare disparities and lead to missed diagnoses.

For Regulatory Success

Regulators such as the FDA, along with the notified bodies that assess devices for CE marking in the EU, are increasingly scrutinizing training data quality.

Clean, well-documented datasets aren’t optional — they’re essential for regulatory approval.

For Business Sustainability

Poor data quality is expensive.

Every month spent debugging data issues is a month your competitors are moving ahead.

Ready to Build AI You Can Defend?

We’re not saying data curation is glamorous — it’s meticulous, sometimes tedious, and often invisible when done right.

But it’s the foundation that determines whether your AI becomes a clinical game-changer or just another cautionary tale.

👉 Schedule a Technical Discussion — Bring your toughest data challenges. No sales pitch, just honest problem-solving.

👉 Explore Our Dataset Portfolio — See examples of METRIC-compliant medical datasets.

For the latest insights on medical data quality, AI in healthcare, and compliance strategies:

Follow NexClinAI on 👉 LinkedIn

Let’s shape the future of health AI — together.

Sigrid Berge van Rooijen — View Original post on LinkedIn